Friday, January 18, 2019

Hello, we’re Goldie MacDonald, Associate Director for Evaluation in the Center for Surveillance, Epidemiology, and Laboratory Services at the Centers for Disease Control and Prevention, and Jeff Engel, Executive Director of the Council of State and Territorial Epidemiologists. We co-chair the Digital Bridge Evaluation Committee, which is composed of professionals from state and local health departments, federal and non-governmental organizations, and the private sector.

Better a diamond with a flaw than a pebble without.

—Proverb

In many organizations, it’s easier to access evaluation reports than evaluation plans, especially as personnel or priorities change. Evaluation plans are usually shared with primary stakeholders, but not always disseminated widely. For example, some plans are not available because the content is sensitive or not user-friendly. Nonetheless, sharing an evaluation plan widely can contribute to transparency and richer discussions about evaluation quality earlier in the evaluation process.

One example of sharing an evaluation plan well-beyond primary stakeholders is the

Digital Bridge (DB) multisite evaluation led by the

Public Health Informatics Institute. DB convenes decision makers in health care, public health, and health information technology to address shared information exchange challenges. DB stakeholders developed a multi-jurisdictional approach to

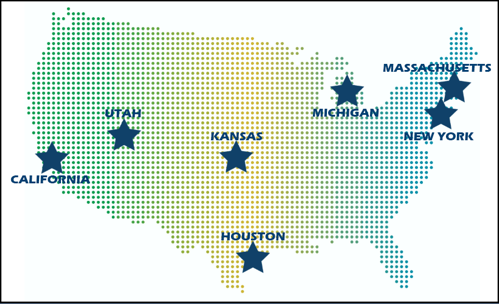

electronic case reporting (eCR) in demonstration sites nationwide. These sites aim to automate transmission of case reports from electronic health records to public health agencies. eCR can result in earlier detection of health-related conditions or events of public concern, more timely intervention, and lowered disease transmission.

(https://digitalbridge.us/infoex/implementation/)

The DB Evaluation Committee led development of an evaluation plan for these sites and referenced the Program Evaluation Standards throughout the planning process. Because eCR activities continue to develop and evolve nationwide, we are currently interested in Standard E1 Evaluation Documentation in the Evaluation Accountability domain. E1 calls for documentation of evaluation purposes, designs, procedures, data, and outcomes. We realized that the evaluation plan is crucial to this documentation and that sharing it widely contributes to dialogue and transparency in meaningful ways. For example, anyone can access the evaluation plan to support current or future eCR activities. Stakeholders and others can consider the quality of the evaluation in more detail as implementation continues. And posting the plan on a public-facing website paves the way to sharing additional artifacts of the evaluation widely.

Lessons Learned: The author of the proverb above prefers a gemstone with a flaw to a small rock without one. Stakeholders in this evaluation likely agree, but they surely want to understand any flaws in the evaluation plan. We need to examine an evaluation to determine its merit or worth; Dan Stufflebeam called this the metaevaluation imperative. An evaluation plan is a key artifact of an evaluation that can be examined even as the evaluation unfolds. For example, stakeholders in this evaluation continue to provide crucial information about practicalities of data collection not addressed in the plan, flaws that were revealed when discussing the plan prior to implementation. In our case, stakeholders chose the diamond, but transparency and documentation help us to see and understand any flaws on the path to a meaningful evaluation.

Do you have questions, concerns, kudos, or content to extend this aea365 contribution? Please add them in the comments section for this post on the aea365 webpage so that we may enrich our community of practice. Would you like to submit an aea365 Tip? Please send a note of interest to aea365@eval.org. aea365 is sponsored by the American Evaluation Association and provides a Tip-a-Day by and for evaluators.

About AEA

The American Evaluation Association is an international professional association and the largest in its field. Evaluation involves assessing the strengths and weaknesses of programs, policies, personnel, products and organizations to improve their effectiveness. AEA’s mission is to improve evaluation practices and methods worldwide, to increase evaluation use, promote evaluation as a profession and support the contribution of evaluation to the generation of theory and knowledge about effective human action. For more information about AEA, visit www.eval.org.